All of this series is mainly based on the Machine Learning course given by Andrew Ng, which is hosted on cousera.org.

Recommender System

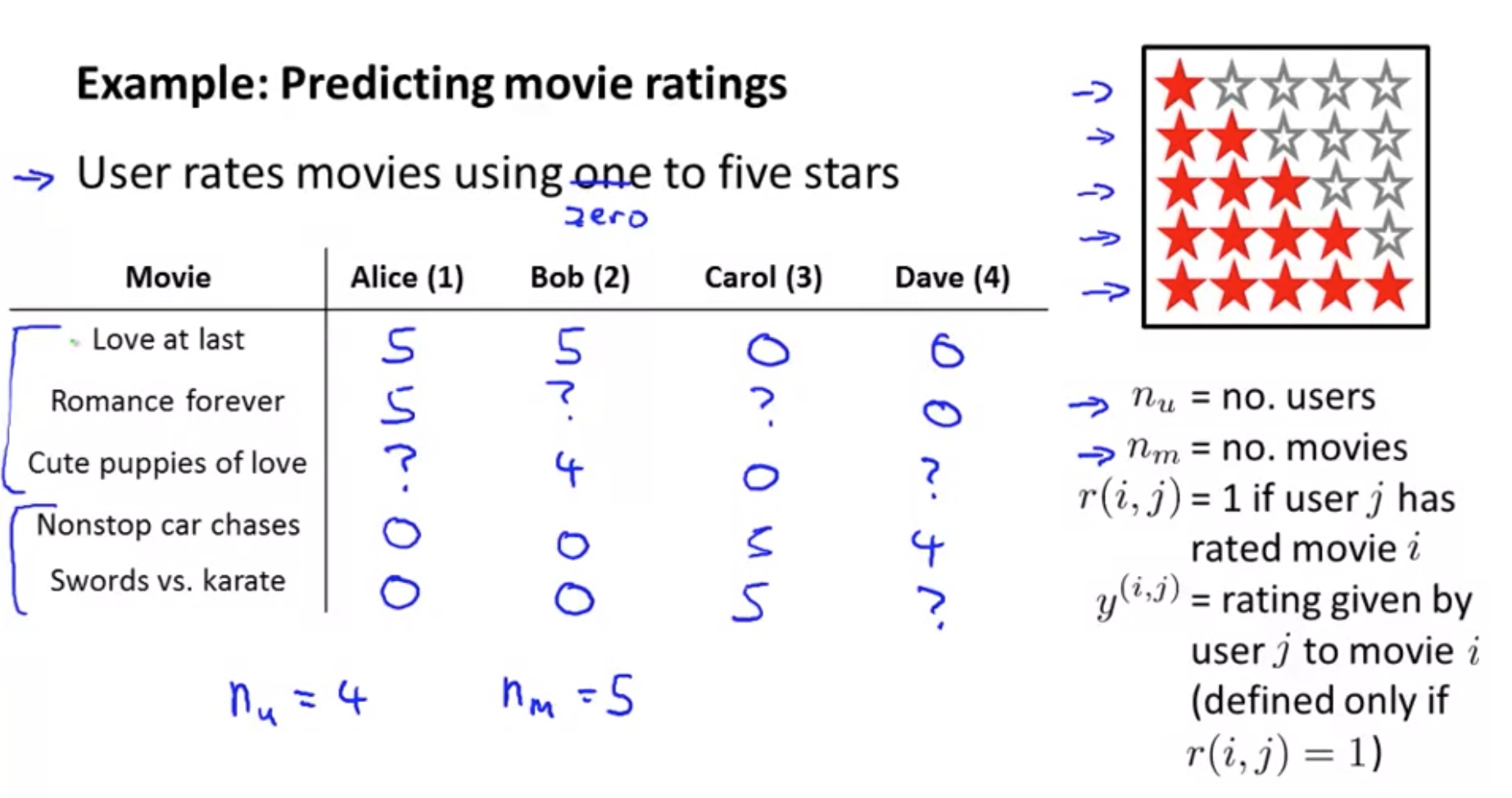

Predicting Movie Rating

Content Based Recommender system

Note: For each movie i, we have some features, with $x^{(i)}_0=1$.

Problem Formulation

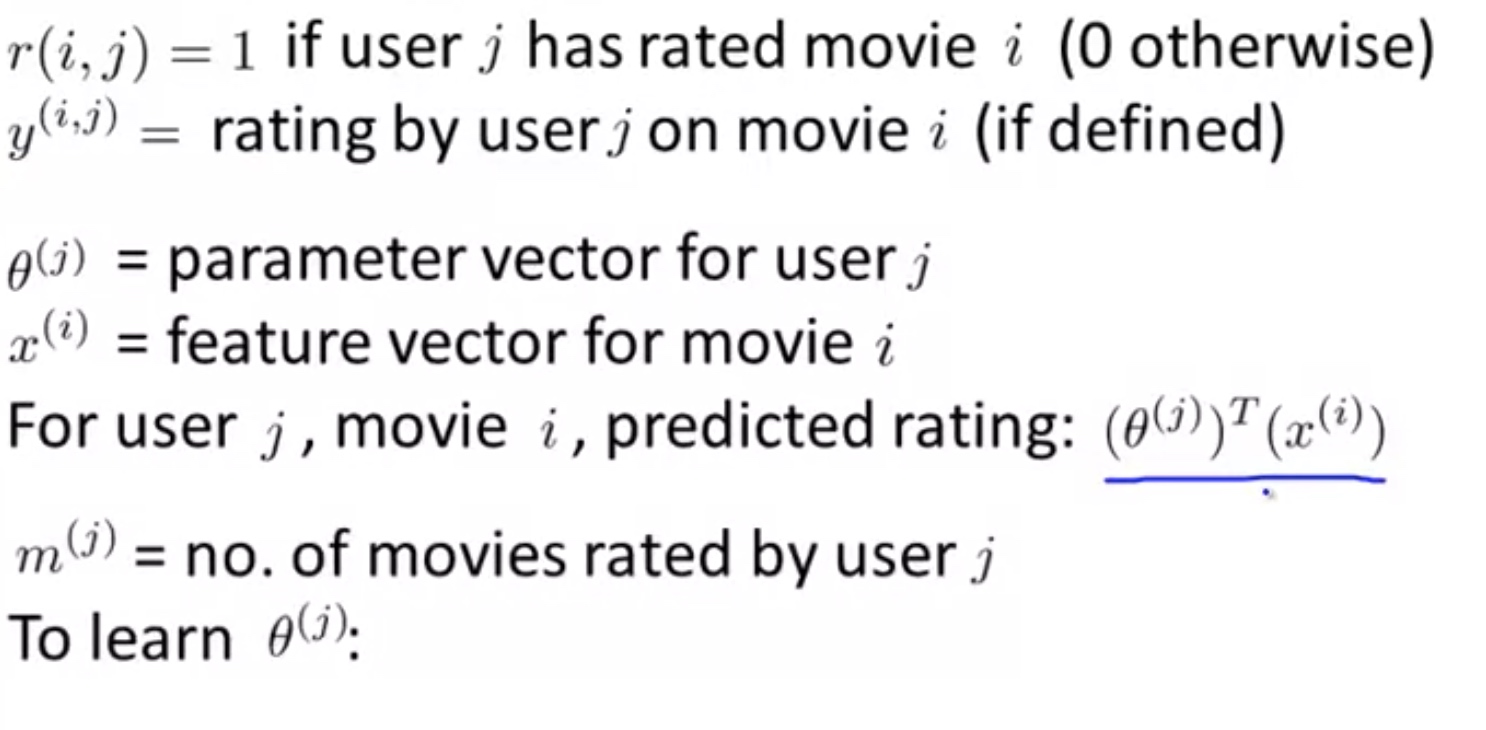

Optimization Objective To learn $\theta^{(j)} $ (parameters for user j):

\[\min _{\theta(j)} \frac{1}{2} \sum_{i: r(i, j)=1}\left(\left(\theta^{(j)}\right)^{T} x^{(i)}-y^{(i, j)}\right)^{2}+\frac{\lambda}{2} \sum_{k=1}^{n}\left(\theta_{k}^{(j)}\right)^{2}\]To learn $\theta^{(j)}, \forall j$:

\[\min _{\theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}} \frac{1}{2} \sum_{j=1}^{n_{u}} \sum_{i: r(i, j)=1}\left(\left(\theta^{(j)}\right)^{T} x^{(i)}-y^{(i, j)}\right)^{2}+\frac{\lambda}{2} \sum_{j=1}^{n_{u}} \sum_{k=1}^{n}\left(\theta_{k}^{(j)}\right)^{2}\]My Thoughts: After we get the $\theta \in R^{n+1}$ for each user, we can try to use PCA to divide the user into a limited number of representative groups: $Group_g,g=1,….,G$. Since for these group, we can have more ratings available, we can get more information about this group, and derive better $\theta$ for each of these group. After this, we find the approximation to $user_j$: which is a linear combination of $Group_g,g=1,….,G$. The r(i,j) is then approximated by the linear combination of the rating for the groups.

Collaborative Filtering

A algorithm which can find which feature to use. After we already get the $\theta$ for the user, based on their ratings on a movie, we can estimate the features of the movie.

Optimization Algorithm

\[\begin{array}{l}{\text { Given } \theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}, \text { to learn } x^{(i)}:} \\ {\quad \min _{x^{(i)}} \frac{1}{2} \sum_{j: r(i, j)=1}\left(\left(\theta^{(j)}\right)^{T} x^{(i)}-y^{(i, j)}\right)^{2}+\frac{\lambda}{2} \sum_{k=1}^{n}\left(x_{k}^{(i)}\right)^{2}}\end{array}\]Further, we can learn the features $x_{k}$ for all the movies.

\[\begin{array}{l}{\text { Given } \theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}, \text { to learn } x^{(1)}, \ldots, x^{\left(n_{m}\right)}:} \\ {\qquad \begin{array}{l}{\min _{x^{(1)}, \ldots, x^{\left(n_{m}\right)}} \frac{1}{2} \sum_{i=1}^{n_{m}} \sum_{j: r(i, j)=1}\left(\left(\theta^{(j)}\right)^{T} x^{(i)}-y^{(i, j)}\right)^{2}+\frac{\lambda}{2} \sum_{i=1}^{n_{m}} \sum_{k=1}^{n}\left(x_{k}^{(i)}\right)^{2}}\end{array}} \\ \end{array}\]

Collaboration between users in the sense of better estimation of features: every user gives the system their preference, with which the system learn better feature x_i, and then further helping make the estimation of preference for each user.

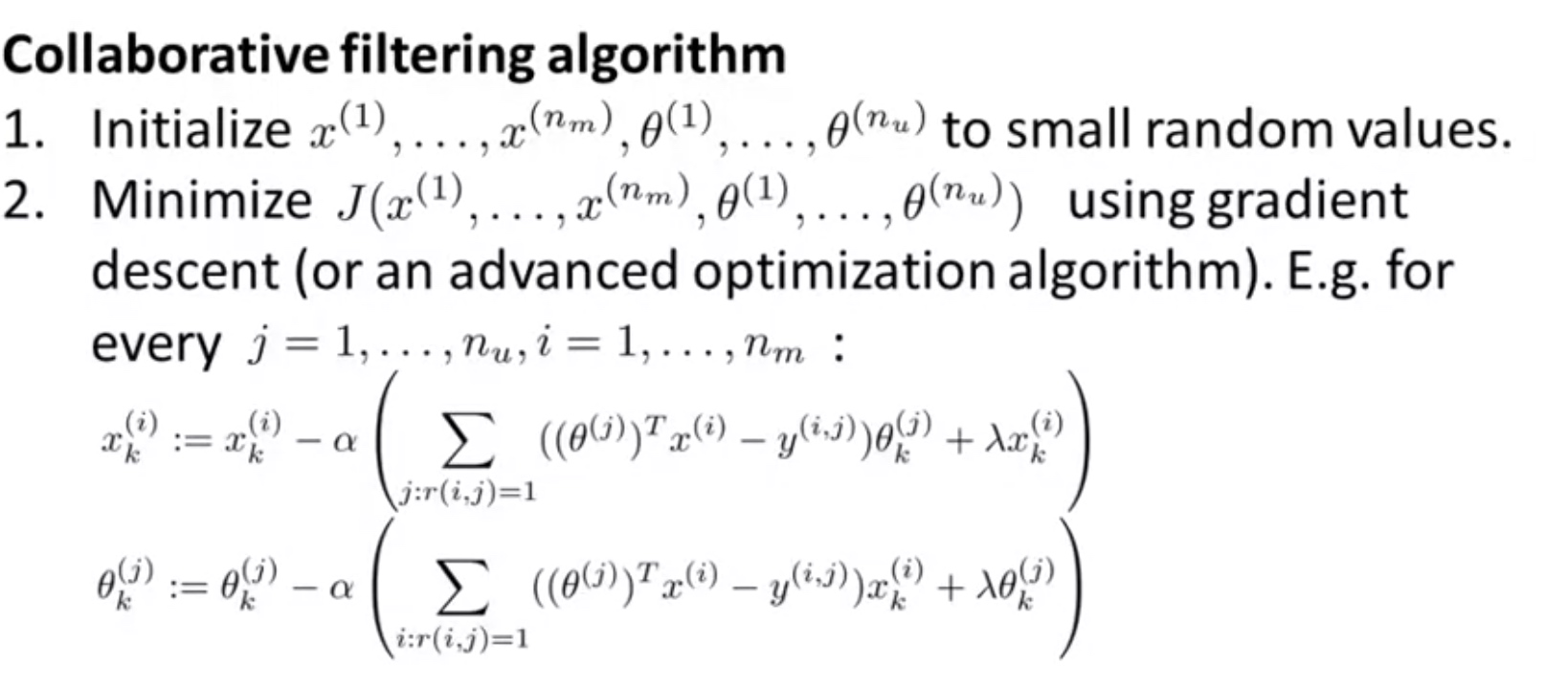

Algorithm for Collaborative Filtering

$\text { Given } x^{(1)}, \ldots, x^{\left(n_{m}\right)}, \text { estimate } \theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}:$

\[\min _{\theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}} \frac{1}{2} \sum_{j=1}^{n_{u}} \sum_{i: r(i, j)=1}\left(\left(\theta^{(j)}\right)^{T} x^{(i)}-y^{(i, j)}\right)^{2}+\frac{\lambda}{2} \sum_{j=1}^{n_{u}} \sum_{k=1}^{n}\left(\theta_{k}^{(j)}\right)^{2}\]${\text { Given } \theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}, \text { to learn } x^{(1)}, \ldots, x^{\left(n_{m}\right)}:}$

\[\min _{x^{(1)}, \ldots, x^{\left(n_{m}\right)}} \frac{1}{2} \sum_{i=1}^{n_{m}} \sum_{j: r(i, j)=1}\left(\left(\theta^{(j)}\right)^{T} x^{(i)}-y^{(i, j)}\right)^{2}+\frac{\lambda}{2} \sum_{i=1}^{n_{m}} \sum_{k=1}^{n}\left(x_{k}^{(i)}\right)^{2}\]We combine this process: $\text { Minimizing } x^{(1)}, \ldots, x^{\left(n_{m}\right)} \text { and } \theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)} \text { simultaneously: }$

\[J\left(x^{(1)}, \ldots, x^{\left(n_{m}\right)}, \theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}\right)=\frac{1}{2} \sum_{(i, j): r(i, j)=1}\left(\left(\theta^{(j)}\right)^{T} x^{(i)}-y^{(i, j)}\right)^{2}+\frac{\lambda}{2} \sum_{i=1}^{n_{m}} \sum_{k=1}^{n}\left(x_{k}^{(i)}\right)^{2}+\frac{\lambda}{2} \sum_{j=1}^{n_{u}} \sum_{k=1}^{n}\left(\theta_{k}^{(j)}\right)^{2}\]The optimization problem:

\[\min_{x,\theta} J(x,\theta)\]where: $x=x^{(1)}, \ldots, x^{\left(n_{m}\right)}$ $\theta=\theta^{(1)}, \ldots, \theta^{\left(n_{u}\right)}$

Note: when we combine the two learning process, we can get rid of $x^{(i)}_0=1$. This is because the features can be learned during the process. If the algorithm really thinks a constant feature is needed, it will make one by itself during the iteration.

In conclusion:

Note:

- here $x,\theta \in R^n$ without the constant term.

- The final predicted star rating is $\theta^T x$

- Initialization of the x and $\theta$ is for the purpose of breaking the symmetry and ensuring the learned $x_i$ and $\theta_j$ are different.

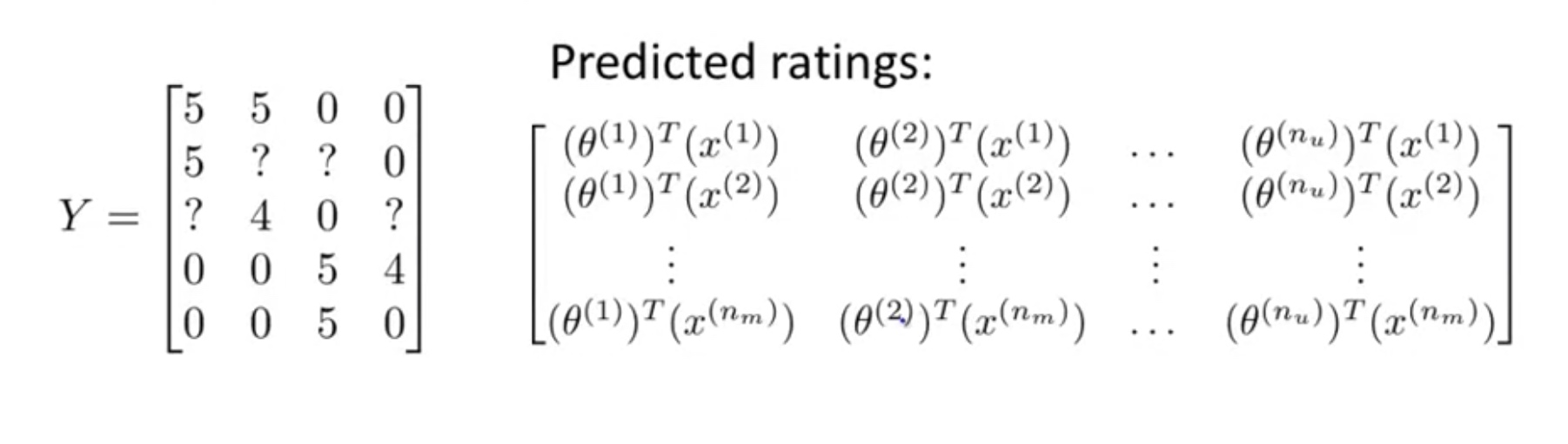

Implementation/vectorization of Collaborative Filtering–Low Rank matrix Factorization

For the collaborative filtering, we basically deal with the problem of finding parameters given the result.

In other words:

$Y= X^T *\theta $ where $\Theta=[{\theta^{(1)}},\theta^{(2)},…,\theta^{(n_u)}]$ $X=[{x^{(1)}},x^{(2)},…,x^{(n_m)}]$

Mathematics about Low-Rank matrix factorization

$\min |\boldsymbol A - \boldsymbol UV^{T} |_2 \text{, subject to}~ rank(\boldsymbol UV^{T}) \leq r), where (|\cdot|_2$ denotes the Frobenius norm.

We start with the basic MF model, formulated as:

\[\min _{\mathbf{U}, \mathbf{V}}\left\|\mathbf{X}-\mathbf{U} \mathbf{V}^{T}\right\|+\mathcal{L}(\mathbf{U}, \mathbf{V})\]where $X\in R^{m\times n}$ is the data matrix to be approximated, and $U\in R^{m\times k},V\in R^{n\times k}$ are two low-dimensional matrices ($k«min(m,m)$), $\mathcal{L}(U,V)$ is a regularization part to avoid overfitting.

Implementation/vectorization of Collaborative Filtering–Mean Normalization

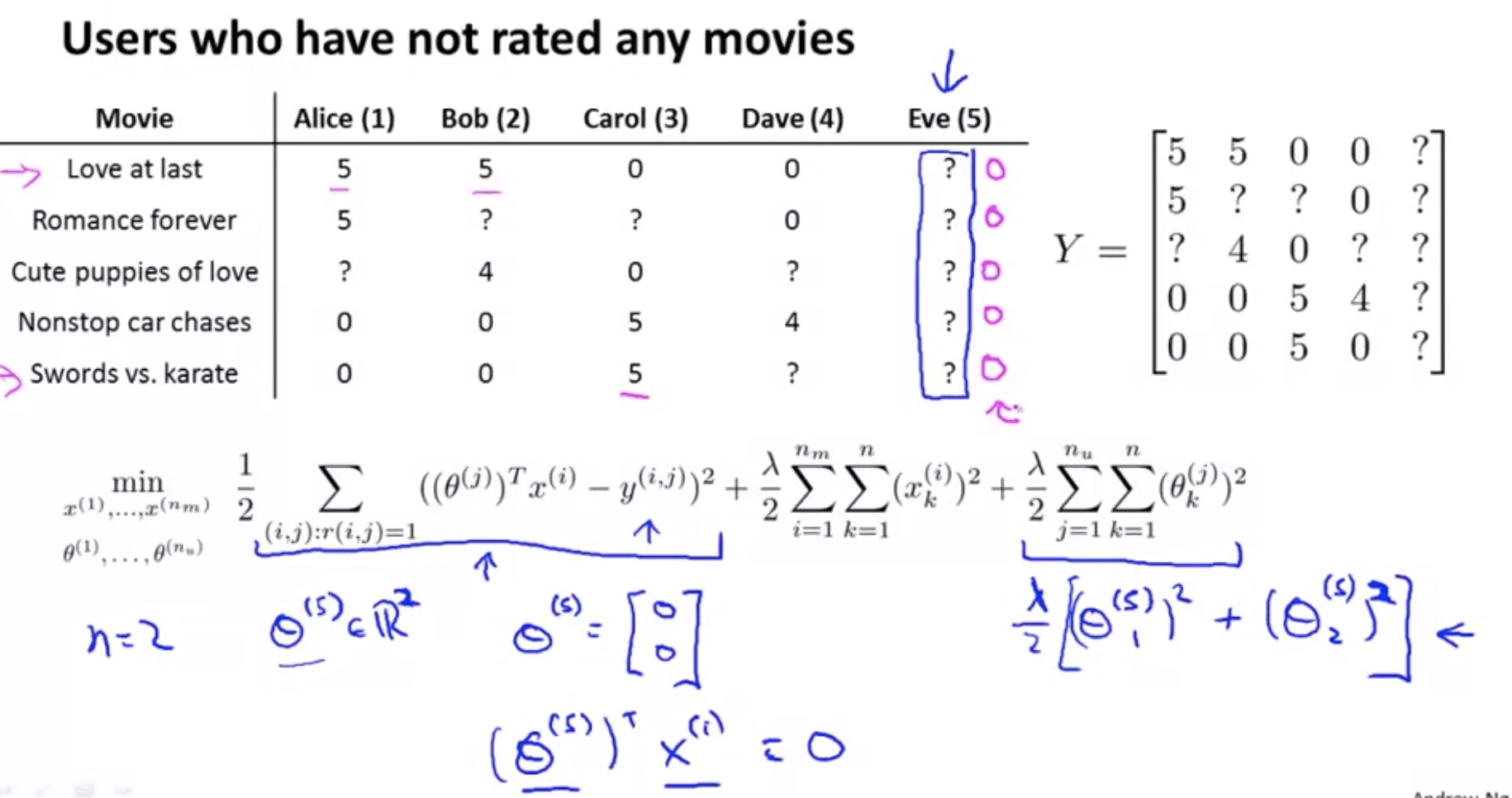

If we do not have a mean normalization process, then for a guy that gives no rating, the $\theta$ will be set to be 0. In this case, no recommendation can be made, as the predicted ratings will be 0 for any movie.

Implementation of mean normalization

$Y=\left[\begin{array}{lllll}{5} & {5} & {0} & {0} & {?} \ {5} & {?} & {?} & {0} & {?} \ {?} & {4} & {0} & {?} & {?} \ {0} & {0} & {5} & {4} & {?} \ {0} & {0} & {5} & {0} & {?}\end{array}\right]$

then we can compute the average rating: $\mu=\left[\begin{array}{c}{2.5} \ {2.5} \ {2} \ {2.25} \ {1.25}\end{array}\right]$

bsfun(@minus,Y,mu):

\[Y=\left[\begin{array}{ccccc}{2.5} & {2.5} & {-2.5} & {-2.5} & {?} \\ {2.5} & {?} & {?} & {-2.5} & {?} \\ {?} & {2} & {-2} & {?} & {?} \\ {-2.25} & {-2.25} & {2.75} & {1.75} & {?} \\ {-1.25} & {-1.25} & {3.75} & {-1.25} & {?}\end{array}\right]\]Now for user j and movie i, the predicted rating is: $<\theta^{(j)},x^{(i)} >+\mu_i$

In this case, even for the user who has not given any ratings, the ratings can be predicted as $0+\mu$.

Comments